AI for Astronomy

Meet the Department Women In STEM 21st October 2021

This week’s blog comes from Alexandra Bonta and Devina Mohan, MSc students working with Professor Anna Scaife on Bayesian deep learning for classification of pulsars and radio galaxies, and follows on from our blog post for Ada Lovelace Day.

Last week we celebrated Ada Lovelace Day and heard about the scientists who inspire our staff and students. Ada Lovelace was a true visionary and one of the first people to realise the potential of computers, long before the first electronic computers were built. She not only translated L. F. Menabrea’s paper “Sketch of the Analytical Engine Invented by Charles Babbage” but also expanded it with extensive notes of her own. In those notes she suggested that, in addition to performing operations on numbers, the Analytical Engine could manipulate other symbols whose relationships could be expressed formally, of any degree of complexity, such as the rules used to compose music:

“Again, it might act upon other things besides number, were objects found whose mutual fundamental relations could be expressed by those of the abstract science of operations, and which should be also susceptible of adaptations to the action of the operating notation and mechanism of the engine. Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.”

Although the Analytical Engine was never built, today’s computers run on processors made of billions of transistors that use zeroes and ones to represent a multitude of abstract relations.

Ada also wanted to develop a “calculus of the nervous system” to understand how what goes on in the brain translates into thoughts and feelings. While we do not yet fully understand how the human brain works, biological neurons loosely inspired the invention of the perceptron algorithm in 1958. The perceptron could learn from data to make a binary classification of its input. Over the years, more sophisticated algorithms were developed that could model complex patterns. These algorithms are broadly known as machine learning (ML), since they discover patterns in data without being explicitly programmed to do so. ML has given rise to exciting applications such as self-driving cars, humanoid robots and rovers that plan their own routes across Mars.
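The perceptron’s learning rule is simple enough to write in a few lines. Below is a minimal, textbook-style sketch in Python, assuming labels of +1/-1 and a made-up, linearly separable toy data set: whenever a point is misclassified, the weights are nudged towards it, until every training point lies on the correct side of the decision boundary.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Learn weights w and bias b so that sign(w . x + b) matches the +1/-1 labels."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            prediction = 1 if activation > 0 else -1
            if prediction != y:
                # Misclassified: move the decision boundary towards this point
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                errors += 1
        if errors == 0:  # every training point is now classified correctly
            break
    return w, b


def predict(w, b, x):
    """Binary classification: +1 on one side of the learned line, -1 on the other."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy data: class +1 lies above the line x2 = x1, class -1 below it
X = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.5), (1.0, 0.0), (2.0, 1.0), (3.0, 1.5)]
y = [1, 1, 1, -1, -1, -1]

w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # → [1, 1, 1, -1, -1, -1]
```

Modern deep learning models stack many such units with smooth activation functions and train them by gradient descent, but the idea of adjusting weights to reduce classification errors is the same.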

We work at the intersection of astronomy and machine learning. Astronomy is an ever-expanding field, with an impressive volume of data collected every day, from radio telescope observations all over the world to data from interstellar probes. Historically, processing all this data meant time-consuming analysis by hand. Things got easier with the arrival of computers, which could handle complex calculations with ease. More recently, astronomers have begun adopting ML methods to support their research. In this blog we discuss the application of deep learning models, a subset of ML, to two subfields of astronomy.

Image of the pulsar in the centre of the Crab Nebula. Composite image from Hubble optical imaging and Chandra X-ray imaging.

Pulsars

When a massive star explodes at the end of its life, in an event known as a supernova, its core collapses and can become what is known as a neutron star. Neutron stars are very small and dense, with radii of roughly 8 to 20 km and masses of about 1.5 times that of the Sun. Pulsars are rotating neutron stars with very strong magnetic fields, which emit beams of radiation from their magnetic poles. Each time the pulsar rotates, a beam flashes past the Earth, and the star appears brighter when observed with a radio telescope. This is known as the lighthouse effect, and it gives the pulsar’s observed brightness graph (known as the pulse profile) its ‘pulsed’ look.

Lighthouse effect of a rotating pulsar. The radiation beams can be seen passing the Earth every time the pulsar spins around its rotation axis, which takes anywhere from a few milliseconds to several seconds depending on the pulsar. Animation by Michael Kramer.
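To make the link between the lighthouse effect and the pulse profile concrete, here is a toy simulation in Python. Everything in it is an assumption chosen for illustration (a 0.5 s period, one narrow Gaussian-shaped flash per rotation, noise as strong as the flash itself); it is not real telescope data. Individual flashes are hard to see in the noisy brightness series, but “folding” the series at the rotation period, i.e. averaging all samples that share the same rotational phase, recovers the pulsed profile.

```python
import math
import random

# Toy parameters, all assumed for illustration (not from a real pulsar)
PERIOD = 0.5          # rotation period in seconds
SAMPLE_TIME = 0.001   # time between brightness samples in seconds
N_SAMPLES = 20_000    # 20 s of data, i.e. 40 rotations
N_BINS = 50           # number of rotational-phase bins in the profile

random.seed(42)

def beam_brightness(t):
    """Brightness from a narrow beam that sweeps past us once per rotation."""
    phase = (t % PERIOD) / PERIOD                      # rotational phase in [0, 1)
    return math.exp(-0.5 * ((phase - 0.5) / 0.02) ** 2)

# Noisy brightness series: the noise is as large as the flash itself
series = [beam_brightness(i * SAMPLE_TIME) + random.gauss(0.0, 1.0)
          for i in range(N_SAMPLES)]

# Fold: average all samples that fall into the same rotational-phase bin
sums = [0.0] * N_BINS
counts = [0] * N_BINS
for i, value in enumerate(series):
    phase = ((i * SAMPLE_TIME) % PERIOD) / PERIOD
    k = min(int(phase * N_BINS), N_BINS - 1)           # guard against rounding up to N_BINS
    sums[k] += value
    counts[k] += 1
profile = [s / c for s, c in zip(sums, counts)]

peak_bin = max(range(N_BINS), key=lambda k: profile[k])
print(f"pulse peak near phase {peak_bin / N_BINS:.2f}")
```

Averaging over many rotations shrinks the noise while the flash, which always arrives at the same phase, adds up, so `profile` shows a single clear peak around phase 0.5.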

Because pulsars are very far away and often quite faint, their signals are easily confused with other types of radio signals, such as interference from satellites and from objects in our Solar System, and noise. We therefore need to examine pulsar candidates carefully before confirming an actual discovery. Traditionally, this has been done by inspecting the data by hand, which is very time-consuming and, for large-scale sky surveys, can become impossible given the volume of data. Deep learning classifiers are powerful tools for this task, able to sort massive data sets quickly while achieving high accuracy and precision.
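Accuracy and precision measure different things, and the difference matters when real pulsars are rare among the candidates. As a hedged illustration with made-up numbers (not results from any survey), the Python sketch below computes both: accuracy is the fraction of all candidates classified correctly, while precision is the fraction of candidates flagged as pulsars that really are pulsars.

```python
def accuracy_and_precision(true_labels, predicted_labels):
    """Accuracy and precision for binary labels, where 1 = pulsar and 0 = not a pulsar."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # real pulsars that were flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # interference/noise wrongly flagged
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    return accuracy, precision


# Hypothetical example: 1000 candidates, only 10 of which are real pulsars
truth = [1] * 10 + [0] * 990
# A hypothetical classifier that finds 8 of the pulsars but also flags 12 non-pulsars
predicted = [1] * 8 + [0] * 2 + [1] * 12 + [0] * 978

acc, prec = accuracy_and_precision(truth, predicted)
print(f"accuracy = {acc:.3f}, precision = {prec:.3f}")  # → accuracy = 0.986, precision = 0.400
```

In this toy case the classifier is 98.6% accurate, yet only 40% of the candidates it flags are real pulsars, which is why both numbers are worth reporting.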

Radio Galaxies

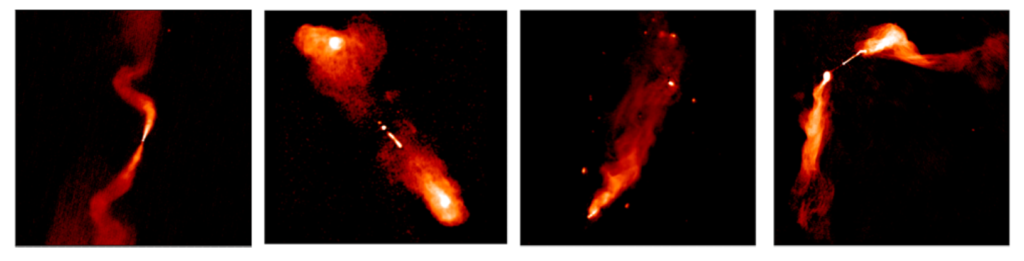

Most galaxies in the universe host a supermassive black hole at their centre. Galaxies whose central black holes are actively accreting matter emit radiation across the electromagnetic (EM) spectrum and are known as Active Galactic Nuclei (AGN). Radio galaxies are a subpopulation of AGN that emit predominantly in the radio regime of the EM spectrum, as electrons travelling near the speed of light interact with magnetic fields (synchrotron radiation). These galaxies are characterised by large-scale jets that can extend to megaparsec distances beyond the centre (1 megaparsec ≈ 3.1 × 10¹⁹ km). Several extrinsic and intrinsic factors give rise to different morphologies, and understanding how populations of radio galaxies are distributed across these morphologies gives us insight into how the galaxies evolve over time. However, there is an ongoing debate about the exact interplay between extrinsic effects, such as the interaction between the jet and its environment, and intrinsic effects, such as differences in central engines and accretion modes, that gives rise to the different morphologies.

Different morphologies of radio galaxies (Image credit: Hardcastle & Croston, 2020)
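The megaparsec figure follows directly from the definition of the parsec. A short Python sketch, assuming the IAU definitions of the astronomical unit (149,597,870.7 km) and of the parsec (648,000/π astronomical units):

```python
import math

AU_KM = 149_597_870.7                    # astronomical unit in km (IAU definition)
PARSEC_KM = AU_KM * 648_000 / math.pi    # 1 parsec = 648000/pi au
MEGAPARSEC_KM = 1e6 * PARSEC_KM          # 1 megaparsec = one million parsecs


def mpc_to_km(distance_mpc):
    """Convert a distance in megaparsecs to kilometres."""
    return distance_mpc * MEGAPARSEC_KM


print(f"1 Mpc ≈ {mpc_to_km(1):.3e} km")  # → 1 Mpc ≈ 3.086e+19 km
```

So a jet extending one megaparsec from its host galaxy spans roughly thirty billion billion kilometres.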

Future radio telescopes with improved sensitivity and resolution will help us answer many research questions in this field. The large data volumes expected from these telescopes make the use of deep learning methods inevitable. However, standard deep learning models tend to make overconfident predictions and cannot quantify the uncertainty in their parameters or predictions. This makes it difficult to use their outputs for scientific analysis. More statistically robust deep learning models based on Bayesian inference are one possible solution to this problem.
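One common way to turn a Bayesian model’s output into an uncertainty estimate is to average the class probabilities predicted by several samples of the model’s weights and then compute the predictive entropy of that average. The Python sketch below assumes those sampled probabilities already exist (how they are drawn, e.g. by variational inference or Monte Carlo dropout, is not shown), and its numbers and two morphology classes are hypothetical. It illustrates the general idea, not necessarily the method used in our work.

```python
import math


def predictive_summary(sampled_probs):
    """Summarise class probabilities from several posterior samples of a model.

    sampled_probs: list of lists; each inner list holds one weight sample's
    class probabilities (summing to 1) for a single input image.
    Returns the averaged probabilities and the predictive entropy in nats.
    """
    n_samples = len(sampled_probs)
    n_classes = len(sampled_probs[0])
    mean_probs = [sum(p[c] for p in sampled_probs) / n_samples for c in range(n_classes)]
    # High entropy means the averaged prediction is spread across classes
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0)
    return mean_probs, entropy


# Hypothetical outputs for two images and two hypothetical morphology classes
confident = [[0.95, 0.05], [0.97, 0.03], [0.96, 0.04]]  # weight samples agree
uncertain = [[0.90, 0.10], [0.20, 0.80], [0.55, 0.45]]  # weight samples disagree

for name, samples in [("confident", confident), ("uncertain", uncertain)]:
    mean_probs, h = predictive_summary(samples)
    print(name, [round(p, 2) for p in mean_probs], round(h, 3))
# → confident [0.96, 0.04] 0.168
# → uncertain [0.55, 0.45] 0.688
```

A single deterministic network would return only one of these probability vectors, for example the confident-looking [0.90, 0.10], and hide the disagreement that the Bayesian average exposes.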

